- TORCH NN SEQUENTIAL GET LAYERS INSTALL

- TORCH NN SEQUENTIAL GET LAYERS MANUAL

- TORCH NN SEQUENTIAL GET LAYERS CODE

In the notebook we can see that training the model on the GPU takes a wall time of 2 min 40 s. I have also trained the model on the CPU; the results are below. When I load a PyTorch FX quantized model into TVM with code like the following: import torch from torch.ao.
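A small timing harness can reproduce this kind of CPU/GPU comparison. The sketch below is minimal and rests on some assumptions. The model and data are hypothetical toys, not the notebook's. It uses `time.perf_counter` in place of the notebook's `%%time` magic. It calls `torch.cuda.synchronize()` before stopping the clock because CUDA kernels run asynchronously. Absolute timings will differ from the figures quoted above.

```python
import time

import torch
from torch import nn


def time_training(device: torch.device, epochs: int = 5) -> float:
    """Train a small toy model on random data and return the wall time in seconds."""
    torch.manual_seed(0)
    # Hypothetical toy data: 1024 samples with 20 features and 2 classes.
    x = torch.randn(1024, 20, device=device)
    y = torch.randint(0, 2, (1024,), device=device)

    model = nn.Sequential(nn.Linear(20, 64), nn.ReLU(), nn.Linear(64, 2)).to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    loss_fn = nn.CrossEntropyLoss()

    start = time.perf_counter()
    for _ in range(epochs):
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
    if device.type == "cuda":
        # CUDA work is asynchronous: wait for it before reading the clock.
        torch.cuda.synchronize()
    return time.perf_counter() - start


cpu_time = time_training(torch.device("cpu"))
print(f"CPU wall time: {cpu_time:.3f} s")
if torch.cuda.is_available():
    gpu_time = time_training(torch.device("cuda"))
    print(f"GPU wall time: {gpu_time:.3f} s")
```

The same function runs on either device. Only the `device` argument changes, because both the data and the model are created on or moved to that device.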

TORCH NN SEQUENTIAL GET LAYERS CODE

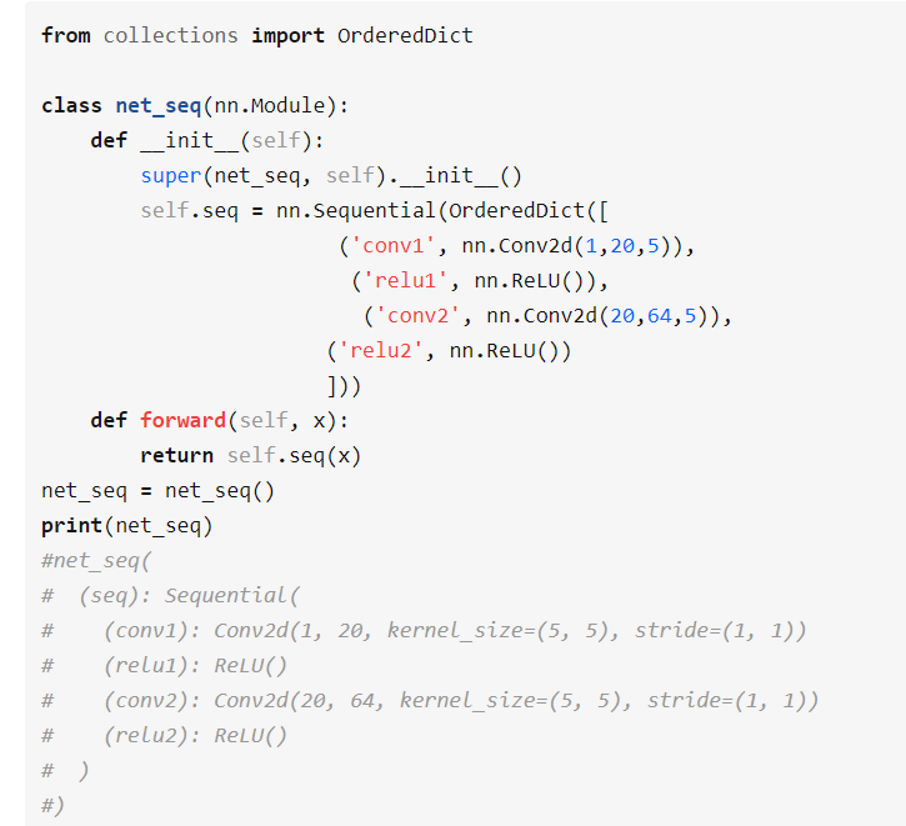

Once you have trained your model and obtained the desired results, you will want to save it and use it to make predictions. PyTorch provides functionality to save and load models, so you do not have to retrain the model every time. We will go through a code implementation of this process.

torch.nn has another handy class we can use to simplify our code: Sequential. If you are familiar with Keras, you will find this implementation very similar: modules are added to it in the order they are passed to the constructor. The choice of how to build the NN architecture is yours. Follow this tutorial to do the setup in Colab.
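The points above cover building a model with `nn.Sequential`, getting its layers, and saving and loading it. They can be sketched together as follows. This is a minimal sketch that assumes an MNIST-style classifier with 784 inputs and 10 classes; those sizes are chosen purely for illustration. It saves the `state_dict`, which the PyTorch documentation recommends over pickling the whole model object. The file path comes from a temporary directory.

```python
import os
import tempfile

import torch
from torch import nn


def build_model() -> nn.Sequential:
    # Modules are registered in the order they are passed to the constructor.
    return nn.Sequential(
        nn.Linear(784, 128),
        nn.ReLU(),
        nn.Dropout(p=0.2),
        nn.Linear(128, 10),
    )


model = build_model()

# Getting layers: nn.Sequential supports indexing and slicing like a list.
first_layer = model[0]   # nn.Linear(784, 128)
last_layer = model[-1]   # nn.Linear(128, 10)
head = model[:2]         # a new nn.Sequential holding the first two layers

# Direct children with their auto-generated names "0", "1", ...
for name, layer in model.named_children():
    print(name, layer)

# Iterating the container yields its modules; keep only the Linear ones.
linear_layers = [m for m in model if isinstance(m, nn.Linear)]

# Save only the learned parameters, then load them into a fresh instance.
path = os.path.join(tempfile.mkdtemp(), "model.pt")
torch.save(model.state_dict(), path)

restored = build_model()
restored.load_state_dict(torch.load(path))
restored.eval()  # switch Dropout to inference behaviour before predicting

with torch.no_grad():
    predictions = restored(torch.randn(4, 784)).argmax(dim=1)
print(predictions)
```

Loading a `state_dict` requires rebuilding the same architecture first, which is why both models come from `build_model()`.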

TORCH NN SEQUENTIAL GET LAYERS INSTALL

If you have gone through the last three posts of this series, you should now be able to define the architecture of a deep neural network and to define and optimize a loss. You should also be aware of a few techniques for reducing over-fitting, such as comparing validation/test set performance with training set performance and adding dropout to the architecture. Along with its ease of implementation, PyTorch also offers built-in GPU (even multi-GPU) support.

If you have a GPU, you need to install the GPU version of PyTorch; get the installation command from this link. Don't feel bad if you don't have a GPU: Google Colab is a lifesaver in that case.

For all torch.nn modules except torch.nn.Sequential, you can only checkpoint a module tree if it lies within one partition from the perspective of pipeline parallelism. For torch.nn.Sequential, each module tree inside the sequential module must lie completely within one partition for activation checkpointing to work.

TORCH NN SEQUENTIAL GET LAYERS MANUAL

A modification of the nn.Sequential class that would infer some input parameters for the modules it contains.

Inferring the shapes of subsequent layers requires manual calculations, e.g. in conv layers with non-default padding / output_padding / stride. In such cases you have to look up the exact formula on the documentation page. Of course, this is a consequence of the dynamic-graph paradigm. However, when the order of layers is predefined, e.g. in an nn.Sequential container, shape inference seems possible and would be quite handy.

My idea is, first of all, to implement a method infer_output_shape in every class inherited from nn.Module. This method would take an input shape and return an output shape. I also need a method (let's call it infer_init_params) that would take an input shape and return a dict of those __init__ arguments that can be inferred from it (e.g. the number of input channels of nn.Conv2d can be inferred from the input shape).

After that, I would like to extend the nn.Sequential class. I would like it to accept not only a sequence of nn.Modules, but a sequence whose items are either nn.Modules or tuples of a class and a dict of kwargs. The modified nn.Sequential class would also require an expected_input_shape argument. It would then initialize as follows. For every tuple in the sequence, it would infer the params needed to initialize the corresponding module. If all the params required to initialize a module are either inferred or provided in the dict of kwargs, it would deduce an output shape and infer the __init__ arguments for the next module.
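The shape-inferring nn.Sequential described above can be sketched in plain Python without modifying PyTorch. This is a minimal sketch that rests on several assumptions. `infer_output_shape` and `infer_init_params` take the names from the proposal, but here they are standalone functions rather than methods on every `nn.Module`. `ShapeInferringSequential` and `conv2d_output_hw` are hypothetical names. Only `Conv2d`, `MaxPool2d` (default `ceil_mode=False`), `Linear`, `Flatten` (default `start_dim=1`), `ReLU` and `Dropout` are handled. Shapes exclude the batch dimension. The conv rule implements the output-size formula from the `nn.Conv2d` documentation: `H_out = floor((H_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride + 1)`. PyTorch's built-in lazy modules (`nn.LazyLinear`, `nn.LazyConv2d`) solve a related problem differently: they infer input sizes at the first forward pass.

```python
import math
from typing import Any, Dict, Tuple, Type, Union

import torch
from torch import nn

Shape = Tuple[int, ...]  # shape without the batch dimension, e.g. (C, H, W)


def _pair(v) -> Tuple[int, int]:
    return (v, v) if isinstance(v, int) else tuple(v)


def conv2d_output_hw(h, w, kernel_size, stride=1, padding=0, dilation=1) -> Tuple[int, int]:
    """Hypothetical helper: the nn.Conv2d output-size formula (numeric padding only)."""
    if isinstance(padding, str):
        raise NotImplementedError("string padding ('same'/'valid') is not handled here")
    k, s, p, d = map(_pair, (kernel_size, stride, padding, dilation))
    h_out = (h + 2 * p[0] - d[0] * (k[0] - 1) - 1) // s[0] + 1
    w_out = (w + 2 * p[1] - d[1] * (k[1] - 1) - 1) // s[1] + 1
    return h_out, w_out


def infer_init_params(cls: type, in_shape: Shape) -> Dict[str, Any]:
    """Hypothetical: the __init__ arguments that follow from the input shape."""
    if cls is nn.Conv2d:
        return {"in_channels": in_shape[0]}
    if cls is nn.Linear:
        return {"in_features": in_shape[-1]}
    return {}


def infer_output_shape(module: nn.Module, in_shape: Shape) -> Shape:
    """Hypothetical: the output shape for a handful of layer types."""
    if isinstance(module, (nn.Conv2d, nn.MaxPool2d)):
        h, w = conv2d_output_hw(in_shape[1], in_shape[2], module.kernel_size,
                                module.stride, module.padding, module.dilation)
        channels = module.out_channels if isinstance(module, nn.Conv2d) else in_shape[0]
        return (channels, h, w)
    if isinstance(module, nn.Linear):
        return (*in_shape[:-1], module.out_features)
    if isinstance(module, nn.Flatten):
        return (math.prod(in_shape),)
    if isinstance(module, (nn.ReLU, nn.Dropout)):
        return in_shape
    raise NotImplementedError(f"no shape rule for {type(module).__name__}")


LayerSpec = Union[nn.Module, Tuple[Type[nn.Module], Dict[str, Any]]]


class ShapeInferringSequential(nn.Sequential):
    """Hypothetical nn.Sequential variant that also accepts (cls, kwargs) tuples."""

    def __init__(self, *layers: LayerSpec, expected_input_shape: Shape):
        shape = tuple(expected_input_shape)
        modules = []
        for spec in layers:
            if isinstance(spec, nn.Module):
                module = spec
            else:
                cls, kwargs = spec
                # Explicitly provided kwargs take precedence over inferred ones.
                module = cls(**{**infer_init_params(cls, shape), **kwargs})
            shape = infer_output_shape(module, shape)
            modules.append(module)
        super().__init__(*modules)
        self.output_shape = shape


net = ShapeInferringSequential(
    (nn.Conv2d, {"out_channels": 16, "kernel_size": 3, "stride": 2, "padding": 1}),
    nn.ReLU(),
    nn.Flatten(),
    (nn.Linear, {"out_features": 10}),  # in_features (16*16*16) is inferred
    expected_input_shape=(3, 32, 32),
)
print(net.output_shape)  # (10,)
```

A useful sanity check for such a sketch is to compare `net.output_shape` with a real forward pass, e.g. `net(torch.randn(2, 3, 32, 32)).shape`.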
